About Fugue

Go faster in the cloud - without breaking the rules

Fugue helps teams move faster in the cloud without breaking the rules that keep cloud environments secure. Enterprise organizations and fast-growing startups that use the cloud at scale and operate in regulated industries rely on Fugue to ensure the security and compliance of their cloud environments while they focus on innovating.

Cloud teams use Fugue to secure the entire cloud development lifecycle—from infrastructure as code through the runtime—with the same platform and rules across AWS, Azure, and Google Cloud. This removes significant time, cost, and risk from cloud operations, giving companies the confidence to move faster in the cloud.

And Fugue is the only cloud security company that guarantees rapid time to value and lasting success for our customers: Get a complete and actionable cloud compliance assessment and interactive visual map of your security posture in 15 minutes—and bring your environment into compliance in 8 weeks.

Our Leadership

Josh Stella, Co-founder & Chief Executive Officer

Josh Stella is Co-founder and CEO of Fugue, the cloud infrastructure automation and security company. Fugue identifies security and compliance violations in cloud infrastructure and ensures they are never repeated.

Previously, Josh was a Principal Solutions Architect at Amazon Web Services, where he supported customers in the area of national security. He has served as CTO for a technology startup and in numerous other IT leadership and technical roles over the past 25 years.

David Mitchell, President and Chief Operating Officer

David Mitchell is the President and Chief Operating Officer of Fugue, where he brings more than 30 years of enterprise software experience. Early in his career, David joined a help desk automation software startup as VP of sales, eventually became its CEO, and negotiated the company’s sale to McAfee. David was recruited to webMethods as VP of worldwide sales, where he helped grow the company to more than $200M in annual sales and to the largest IPO in the history of the Washington, DC region. When the founder and CEO of webMethods temporarily retired, David was recruited to be CEO, leading the company to profitability, returning the top line to growth, and negotiating the merger of webMethods with Software AG. Over the past 13 years, David has served as CEO, COO, or SVP of worldwide sales at a number of software companies, including Appian, Global 360, and VersionOne.

Richard Park, Chief Product Officer

Richard, Fugue’s Chief Product Officer, is a tech executive with nearly 25 years of experience in product management, product marketing, and security and network engineering. He loves helping startups by scaling product management and product marketing teams from the ground up and defining a company’s “North Star” for direction and focus. Richard oversees product and engineering functions at Fugue.

He previously served as VP of Product Management at ZeroFOX, with responsibility for all product management activities, from concept and vision to strategic planning, product definition, and execution. Over nearly four years, Richard helped grow the enterprise customer base from 2 to more than 200 customers and expand the product management team from a single PM to five PMs and UX designers.

Richard holds MBA and AB degrees from Harvard University and an MS in Computer Science from Johns Hopkins University.

Jennifer Troxell, Chief Marketing Officer

Jennifer Troxell, Fugue’s Chief Marketing Officer, brings 25 years of SaaS marketing experience and a record of measurable results at some of the biggest brands in technology. Most notably, Jennifer led go-to-market strategy and execution for integration leader webMethods, which was acquired by Software AG, and for OpenText’s BPM business unit following OpenText’s acquisition of Global 360. Most recently, she led demand marketing at Appian, the leader in low-code software. Over the last 10 years, Jennifer has also consulted for many early-stage tech companies. She is both a strategic thinker and a tactical planner who takes a sales- and buyer-centric approach to marketing. Jennifer graduated from Penn State University and enjoys spending time with her four kids and her dog.

Andrew Wright, Co-founder & Chief Evangelist

Drew has worked at the intersection of digital media, marketing, and grassroots communications for nearly 20 years.

Prior to co-founding Fugue, he was the founder and CEO of Grasshop, a startup that provided a communications platform for organizations to engage their members and stakeholders in issue advocacy.

Since 2002, Drew has worked with teams developing websites and applications. He provided digital strategy for a wide range of clients as a freelancer, following six years at FleishmanHillard and Burson-Marsteller.

When not focused on Fugue, Drew is an active musician performing in and around the Washington, D.C. area. He received his MBA from The George Washington University School of Business.

Curtis Myzie, Vice President of Engineering

Curtis, Fugue's Vice President of Engineering, has over a dozen years of experience in software development and engineering management at product-focused companies. He is always looking to help the Product team ship valuable product improvements day in and day out.

At Fugue, Curtis helped build the company's SaaS offering as a Principal Engineer before moving into his current role. Prior to joining Fugue in 2018, Curtis served as CTO of Advanced Simulation Technology, Inc., where he specialized in real-time audio and communications hardware and software for professional flight simulators and other training systems.

While away from the keyboard, you might find Curtis hanging out with his two boys, working with them on various kid-sized engineering projects. Curtis holds a BS in Computer Science from the University of Virginia.

Ankush Khurana, Vice President of Customer Success and Solution Architecture

Ankush Khurana, Vice President of Customer Success and Solution Architecture at Fugue, is a seasoned IT service delivery executive with more than 15 years of experience in SaaS and cloud at startups and enterprise organizations. His passion for customers and technology, combined with a hands-on leadership style, ensures that the solution architecture and support teams consistently deliver on customer happiness. Most importantly, his technical knowledge and enthusiasm help advance each customer's business goals from the very first contact and deliver a world-class experience.

Prior to Fugue, Ankush was instrumental in building and leading the global Professional Services Integration and Implementation practice at Angel.com. His team was responsible for enabling customers as the company grew from 15 SMB customers to more than 300, including several Fortune 500 companies, before Angel.com was acquired by Genesys in 2013.

Christopher Suen, Vice President of Product

Chris Suen is VP of Product at Fugue, where he brings more than a decade of experience building fast-growth startups and investing in software and internet companies.

Chris previously led business operations and global development at Bridge International Academies, an edtech company that has scaled its learning platform and schools across Africa and Asia. He was also on the technology venture capital team at New Enterprise Associates, where he invested in more than a dozen companies, including Care.com, Groupon, ScienceLogic, and Sprout Social. Chris received his MS in Management Science and Engineering from Stanford University and his BA in Economics from Harvard College.

Tyler Mills, Vice President of Sales & Alliance

Tyler Mills brings to Fugue more than 15 years of experience developing and leading high-performing enterprise sales teams and building alliance ecosystems. Most recently, Mills was Senior Vice President of Global Alliances at Lease Accelerator, where he led a go-to-market team through a significant revenue growth phase. He has extensive experience working with enterprises and fast-growing software startups to unlock value creation opportunities.

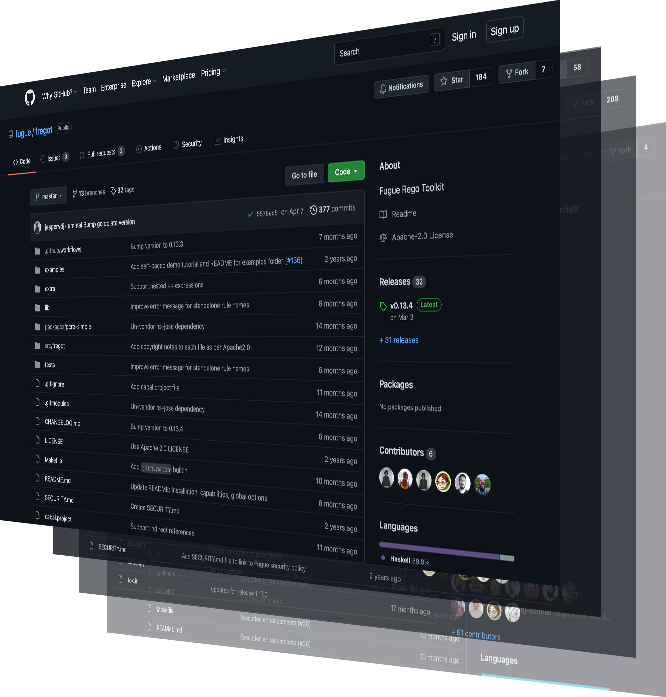

Our Commitment to Open Source

Here at Fugue, we’re big believers in the power of open source for scalable, secure software development and cloud engineering. We are active contributors to projects such as Terraform and Open Policy Agent, and we are members of the Cloud Native Computing Foundation.

Our engineering team maintains a number of open source projects including:

Regula: a tool for securing infrastructure as code, with support for Terraform and CloudFormation; it is built on Open Policy Agent and integrates with Conftest and other DevOps tools

Fregot: a lightweight set of tools that enhances the development experience for Rego (the Open Policy Agent query language), enabling users to easily evaluate expressions, debug code, test policies, and more

Credstash: a tool for managing credentials in the cloud using AWS KMS and DynamoDB

Zim: a caching build system for teams using monorepos

Contact Us

Headquarters

47 E. All Saints St. | Frederick, MD 21701

D.C. Office

1800 M St., Suite 501N | Washington, DC 20036

Join Our Team

We're in the middle of a generational shift in technology from on-premises data centers to cloud computing. As with any emerging technology, security is critical: organizations will adopt new technologies only if they are confident they are protected against malicious users and the risk of data breaches.